
Introduction to Language Models

Ever wondered what language models are and how they work? Language models have completely transformed how we interact with technology in recent years. I remember my first encounter with a chatbot back in 2018 – it was clunky, constantly misunderstood me, and responded with obviously templated answers. Fast forward to today, and I’m having nuanced conversations with AI systems that sometimes leave me forgetting I’m not chatting with a human!

These incredible systems are at the core of modern AI, enabling machines to understand, process, and generate human-like text in ways that seemed impossible just a decade ago. The journey from basic rule-based natural language processing to the advanced models we have today – like GPT-4o, Claude 3.5 Sonnet, Gemini, and LLaMA 3 – represents one of the most significant technological leaps I’ve witnessed in my lifetime.

What amazes me most is how these language models have quietly infiltrated nearly every industry. From my doctor using AI to summarize patient notes to my favorite news site generating article summaries, these tools are everywhere once you start looking for them.

In this guide, I’ll walk you through how language models actually work under the hood, trace their fascinating evolution, explore the most important AI models shaping our world today, and show concrete examples of how they’re being applied across various fields.

What is NLP and Machine Learning? Their Role in Language Models

What is NLP (Natural Language Processing)?

Natural Language Processing might sound like complex tech jargon, but I promise it’s more straightforward than it seems. NLP is essentially the field of AI that enables computers to interpret, understand, and respond to human language – whether that’s text or speech.

I first became fascinated with NLP when I realized my spam filter was actually “reading” my emails to determine what was junk. Mind-blowing! Today, NLP powers so much more than spam filters – it’s the technology behind chatbots, translation services, sentiment analysis tools, and text summarization systems.

The real breakthrough with NLP came when researchers figured out how to help computers understand context and nuance in language. Trust me, that’s no small feat! I still remember trying to use early translation tools that would give you hilariously wrong results because they translated words literally without understanding idioms or cultural references.

What is Machine Learning (ML)?

Machine Learning changed everything about how we approach AI. Instead of programming explicit rules (which I tried doing once for a simple chatbot and nearly lost my mind after writing hundreds of if-then statements), ML allows AI models to learn from data without requiring explicit programming for every possible scenario.

There are several flavors of ML that power different aspects of language models:

- Supervised Learning is what I first experimented with when building a basic sentiment classifier. You feed the system examples of text labeled as “positive” or “negative,” and it learns to recognize patterns associated with each label.

- Unsupervised Learning was harder for me to wrap my head around initially. These systems find patterns in unlabeled data – like grouping news articles by topic without being told what the topics should be.

- Reinforcement Learning felt like training my stubborn dog when I first encountered it. The AI learns through trial and error, getting “rewards” for good outputs and “penalties” for poor ones.
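To make the supervised case concrete, here is a minimal sketch of a sentiment classifier in plain Python. The training sentences, the `train` and `predict` helpers, and the word-counting score are all my own illustration, not how any production system works; real classifiers use far more data and a proper statistical model.

```python
from collections import Counter

# Tiny hand-labeled training set (made up for illustration).
TRAIN = [
    ("i love this phone", "positive"),
    ("what a great movie", "positive"),
    ("great service and friendly staff", "positive"),
    ("i hate waiting in line", "negative"),
    ("this was a terrible meal", "negative"),
    ("awful battery and terrible screen", "negative"),
]

def train(examples):
    """Count how often each word appears under each label."""
    counts = {"positive": Counter(), "negative": Counter()}
    for text, label in examples:
        counts[label].update(text.split())
    return counts

def predict(counts, text):
    """Pick the label whose training texts used the input words most often."""
    scores = {
        label: sum(word_counts[word] for word in text.split())
        for label, word_counts in counts.items()
    }
    return max(scores, key=scores.get)

model = train(TRAIN)
print(predict(model, "great phone"))
print(predict(model, "terrible battery"))
```

The labels do all the teaching here: the code never defines what “positive” means, it only counts which words showed up next to which label.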

How NLP and ML Power Language Models

The magic happens when you combine these technologies. ML algorithms train NLP models by processing massive amounts of text data – we’re talking billions of pages drawn from books, articles, websites, and conversations.

I remember the excitement in the AI community when Transformers arrived on the scene. These neural network architectures revolutionized NLP with their self-attention mechanisms that could process relationships between words regardless of how far apart they appeared in a sentence.

Large Language Models (LLMs) represent the culmination of deep learning techniques combined with advanced NLP approaches and sophisticated ML training methods. What makes them so powerful is their ability to generate context-aware text that considers not just the immediate words but the broader meaning and intent behind communication.

What Are Language Models?

Language models blew my mind the first time I truly understood what they were doing. At their core, they’re AI systems trained to predict and generate text by analyzing patterns in massive datasets.

Think about it like this – have you ever had your phone suggest the next word as you’re typing a message? That’s a simple language model in action! I’m still amazed when my phone accurately predicts exactly what I was going to say next.

Types of Language Models:

- Statistical Models – These older models use probability for predictions. N-grams, which I learned about in my first NLP class, look at sequences of words and calculate the probability of what comes next. They’re kinda like that game where you try to predict the end of someone’s sentence.

- Neural Models – These use deep learning for much better accuracy. I remember the first time I tested a transformer-based model – the quality jump was mind-blowing compared to the statistical models I’d been playing with.

- Large Language Models (LLMs) – These advanced behemoths are trained on trillions of words. The first time I interacted with GPT-3, I honestly got goosebumps – it felt like I was witnessing the future unfold right in front of me.
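The statistical approach is small enough to sketch in a few lines. Below is a toy bigram model (an N-gram with N = 2) that counts which word follows which and turns the counts into probabilities. The corpus and the function names are made up for illustration; a real model would be built from a much larger text collection.

```python
from collections import Counter, defaultdict

# Toy corpus, invented for this example.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."

def build_bigrams(text):
    """Map each word to a Counter of the words that follow it."""
    words = text.split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def next_word_probs(following, word):
    """Turn the follow-up counts for `word` into probabilities."""
    counts = following.get(word, Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}

def suggest(following, word):
    """Return the most frequent follow-up word, or None for unseen words."""
    counts = following.get(word)
    return counts.most_common(1)[0][0] if counts else None

model = build_bigrams(corpus)
print(suggest(model, "the"))
print(next_word_probs(model, "sat"))
```

This is roughly the idea behind a basic keyboard suggestion: look at what you just typed and offer whatever most often came next in the data.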

What Are Large Language Models (LLMs)?

LLMs are the heavy hitters of the AI world – massive models trained on incredibly diverse datasets to generate human-like text. My first experience with an LLM left me slightly unsettled – how could a machine write something that sounded so… human?

Features of LLMs:

- They understand context, intent, and meaning in ways earlier models simply couldn’t. I’ve watched them maintain the thread of a conversation through multiple complex topics without losing track.

- They generate coherent, logical responses that actually make sense in context. Gone are the days of nonsensical AI outputs that I used to laugh at.

- They have billions of parameters, the adjustable numerical weights that get tuned during training – though I still struggle to truly comprehend what that means in practice. The scale is just mind-boggling.

- They improve via fine-tuning and reinforcement learning – kinda like how I got better at writing through practice and feedback, just way faster.

Examples of Popular LLMs:

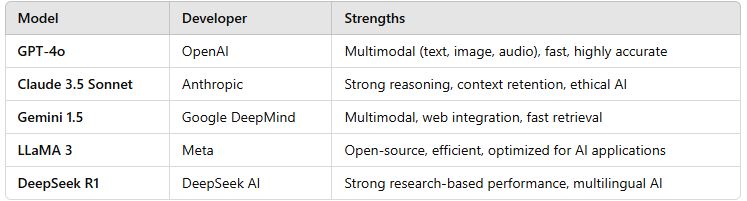

GPT-4o, Claude 3.5 Sonnet, Gemini 1.5, LLaMA 3, and DeepSeek R1 are the current stars of the show. I’ve spent countless hours testing each of them, and the differences between their outputs can be subtle but meaningful depending on your use case.

How Do Language Models Work?

Understanding how these models actually work took me months of reading research papers and watching tutorials. Let me break down the key components in plain English:

Key Components of a Language Model

Tokenization – This is just fancy talk for splitting text into words or subwords. I was surprised to learn that most models don’t actually process whole words but rather chunks of characters called tokens.
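Here is a rough sketch of what splitting into subwords can look like. It uses a greedy longest-match rule over a tiny vocabulary I wrote by hand. That vocabulary and the `tokenize` function are assumptions for illustration only; real tokenizers such as BPE or WordPiece learn their vocabularies from data and differ in the details.

```python
# Hand-written toy vocabulary (an assumption; real vocabularies are learned).
VOCAB = {"token", "ization", "un", "believ", "able", "s"}

def tokenize(word, vocab=VOCAB):
    """Split a word into the longest vocabulary pieces, left to right."""
    tokens = []
    i = 0
    while i < len(word):
        # Try the longest remaining substring first, then shrink it.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                tokens.append(word[i:j])
                i = j
                break
        else:
            # Nothing matched: fall back to a single character.
            tokens.append(word[i])
            i += 1
    return tokens

print(tokenize("tokenization"))
print(tokenize("unbelievable"))
```

The single-character fallback is why a subword tokenizer never gets stuck on a word it has not seen: it can always spell the word out from smaller pieces.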

Embeddings – These convert words (or tokens) into vectors of numbers that the model can work with. When I first visualized word embeddings and saw how “king” minus “man” plus “woman” approximately equals “queen,” I had a real “aha!” moment about how machines conceptualize language.
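The king and queen arithmetic can be reproduced with toy numbers. In the sketch below, the three-dimensional vectors are hand-picked by me (roughly “royalty”, “male”, “female”) so that the analogy works; real embeddings have hundreds of dimensions and are learned from text, not assigned by hand.

```python
import math

# Hand-picked toy vectors: [royalty, male, female]. Purely illustrative.
EMBEDDINGS = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
}

def cosine(a, b):
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def analogy(a, b, c, embeddings=EMBEDDINGS):
    """Find the word closest to vec(a) - vec(b) + vec(c), skipping the inputs."""
    target = [x - y + z for x, y, z in zip(embeddings[a], embeddings[b], embeddings[c])]
    candidates = [w for w in embeddings if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(embeddings[w], target))

print(analogy("king", "man", "woman"))
```

Leaving the three input words out of the candidate list is the usual convention for this kind of analogy test, since the result often lands close to one of the inputs.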

Attention Mechanisms – This brilliant innovation helps models understand relationships between words. I think of it like how your brain can instantly connect related concepts even if they appear far apart in a text.
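For the curious, here is a stripped-down sketch of scaled dot-product attention, the core calculation inside self-attention. To keep it short I reuse the same toy vectors as queries, keys, and values; a real Transformer first passes them through learned projection matrices and runs many attention heads in parallel. The vectors and function names are my own assumptions.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dimension).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        # Output is the weighted average of the value vectors.
        mixed = [
            sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy "token" vectors; the first and third point in similar directions.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.1]]
outputs, weights = attention(tokens, tokens, tokens)
print([round(w, 3) for w in weights[0]])
```

Notice that nothing in the calculation depends on how far apart two tokens sit in the sequence, which is why attention can link distant words as easily as neighboring ones.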

Training & Fine-Tuning – LLMs learn from massive datasets initially, then adapt to specific tasks. I’ve fine-tuned smaller models myself, and the improvement in performance for specialized tasks can be dramatic.

Example: How GPT-4o Generates Text

Let’s say the input is: “AI will change the future by…”

The model analyzes patterns it’s seen in similar contexts and predicts the most likely continuation one token at a time, based on its training. Each token takes only milliseconds to produce, yet involves billions of calculations.

Output: “AI will change the future by enhancing automation, creativity, and efficiency.”

What’s wild is that behind this simple prediction are layers upon layers of mathematical operations that somehow capture the essence of human language. Still blows my mind!
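The generation loop itself is simpler than the math underneath it. The sketch below fakes the model with a small lookup table of scores (the `TOY_LOGITS` table is entirely made up) and always picks the highest-probability token, which is called greedy decoding. A real model computes its scores from the entire context, not just the last word, and production systems often sample from the probabilities instead of always taking the top choice.

```python
import math

# Made-up scores ("logits") for candidate next tokens, keyed by the last token.
# A real model computes these from the whole context with a neural network.
TOY_LOGITS = {
    "by": {"enhancing": 2.0, "replacing": 1.0, "banana": -3.0},
    "enhancing": {"automation": 1.5, "creativity": 1.2, "<end>": -1.0},
    "automation": {"<end>": 2.0, "and": 0.5},
}

def softmax(logits):
    """Convert a dict of scores into a dict of probabilities."""
    top = max(logits.values())
    exps = {tok: math.exp(score - top) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def generate(prompt, max_tokens=5):
    """Greedy decoding: repeatedly append the most probable next token."""
    tokens = prompt.split()
    for _ in range(max_tokens):
        logits = TOY_LOGITS.get(tokens[-1])
        if logits is None:
            break  # the toy table has no entry for this token
        probs = softmax(logits)
        best = max(probs, key=probs.get)
        if best == "<end>":
            break
        tokens.append(best)
    return " ".join(tokens)

print(generate("AI will change the future by"))
```

Each pass through the loop corresponds to one token of output, so a longer answer simply means more trips around it.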

The Evolution of Language Models: From Rule-Based NLP to LLMs

1. Early NLP Approaches

- Rule-Based Models – These followed predefined grammar rules. Anyone remember ELIZA from the 1960s? I played with a recreation once and laughed at how it just rearranged your statements into questions.

- Statistical Models – These used probability-based text prediction. Markov Chains and N-grams were my introduction to computational linguistics, and I remember being impressed at how effective simple statistical approaches could be.
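To show how little machinery the rule-based approach needs, here is a tiny ELIZA-style responder. The three patterns and the fallback line are my own invention, not the original ELIZA script, which had many more rules and also swapped pronouns.

```python
import re

# Hypothetical rules: a pattern to match and a question template to fill in.
RULES = [
    (re.compile(r"^i feel (.+)$", re.IGNORECASE), "Why do you feel {}?"),
    (re.compile(r"^i am (.+)$", re.IGNORECASE), "How long have you been {}?"),
    (re.compile(r"^my (.+)$", re.IGNORECASE), "Tell me more about your {}."),
]

def respond(statement):
    """Return the first matching rule's question, or a generic prompt."""
    text = statement.strip().rstrip(".!")
    for pattern, template in RULES:
        match = pattern.match(text)
        if match:
            return template.format(match.group(1))
    return "Please go on."

print(respond("I feel tired."))
print(respond("I am stuck on this bug"))
print(respond("Hello"))
```

There is no understanding anywhere in this code, only pattern matching, which is why such systems fall apart as soon as the user says something the rules did not anticipate.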

2. Neural Network Revolution

The neural network revolution changed everything about how we approach language modeling:

- Recurrent Neural Networks (RNNs) enabled sequence-based text generation but had frustrating memory limitations. I spent weeks trying to get an RNN to generate coherent paragraphs, only to watch it forget the context after a few sentences.

- Long Short-Term Memory (LSTM) networks improved memory retention but were computationally expensive. My poor laptop would overheat just trying to train a simple LSTM model!
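The forgetting problem can be seen even in a one-neuron RNN. In this sketch, the weights are hand-picked rather than trained, and `memory_gap` is a helper I made up. It feeds two sequences that differ only in their first input and measures how far apart the final hidden states end up.

```python
import math

def run_rnn(inputs, w_h=0.5, w_x=1.0):
    """Feed inputs one at a time through a one-unit RNN; return the final hidden state."""
    h = 0.0
    for x in inputs:
        # The new state mixes the previous state with the current input.
        h = math.tanh(w_h * h + w_x * x)
    return h

def memory_gap(steps_after):
    """How much the first input still matters after `steps_after` neutral inputs."""
    a = run_rnn([1.0] + [0.0] * steps_after)
    b = run_rnn([-1.0] + [0.0] * steps_after)
    return abs(a - b)

for n in (0, 2, 5):
    print(n, round(memory_gap(n), 3))
```

With these weights the gap shrinks at every step, so the early input has nearly vanished after a handful of tokens. LSTMs add gates that let the network choose to hold on to information for longer.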

3. The Rise of Transformers & LLMs

- Transformers burst onto the scene in 2017 with Google’s landmark paper “Attention Is All You Need.” I remember reading it and thinking it seemed interesting but had no idea it would completely revolutionize the field.

- The LLM Boom (2020-Present) has been absolutely wild to witness. OpenAI’s GPT models, Meta’s LLaMA, and Google’s Gemini have transformed what we thought was possible in AI text generation. I went from being impressed by a model that could write a coherent paragraph to models that can write entire books, code complex programs, and have nuanced discussions about philosophy.

Best Large Language Models in 2025

There are so many impressive models out there now, each with their strengths. I’ve spent way too much time testing all of them (seriously, my partner jokes that I talk to AI more than humans these days). Here’s a breakdown of how I use the leading AI models and what makes each one stand out:

1. GPT-4o: The All-Rounder for Everyday AI Tasks

GPT-4o is my go-to for general AI applications. It’s fast, reliable, and excels at a wide range of tasks, from content generation to coding assistance.

- Daily utility – I use it almost every day for drafting emails, brainstorming ideas, and refining written content.

- Coding support – It’s great for debugging code, offering explanations, and even generating snippets.

- Natural conversations – The responses feel fluid and human-like, making it easy to interact with.

If you need an AI that can handle diverse tasks with a strong balance of speed and quality, GPT-4o is a solid choice.

2. Claude 3.5: The Contextual Thinker for Long Documents

Claude 3.5 shines in scenarios where deep context retention is key. If you’re analyzing complex documents or need a model that maintains coherence across long conversations, this is a strong contender.

- Long-context comprehension – I’ve tested it on lengthy reports, and it keeps track of details impressively well.

- Ethical AI applications – It tends to be more cautious in responses, making it suitable for tasks requiring responsible AI behavior.

- Clear, structured explanations – When working through complex topics, it breaks things down in an easy-to-follow manner.

For research-heavy work or legal and compliance-related tasks, Claude 3.5 is a powerful tool.

3. Gemini 1.5: The Researcher’s AI with Web-Powered Insights

What sets Gemini 1.5 apart is its ability to incorporate real-time web information, making it an excellent choice for research.

- Up-to-date knowledge – While a model on its own is limited to what was in its training data, Gemini 1.5 can fetch real-time insights when it is connected to Google Search.

- Great for market research – I’ve used it to gather the latest trends and competitor analysis.

- Fact-checking and verification – If you need to ensure accuracy in a fast-changing field, it’s invaluable.

If you’re in journalism, business intelligence, or academic research, Gemini 1.5 can be a game-changer.

4. LLaMA 3: The Open-Source Innovator

For developers and AI enthusiasts looking for customization, LLaMA 3 is a standout option. I’ve been experimenting with fine-tuning it for niche applications, and the results have been promising.

- Openly available weights – Unlike closed, API-only models, LLaMA 3 lets you download the model weights and modify them yourself, subject to the terms of Meta’s community license.

- Great for AI customization – I’ve used it to tailor AI models for specific industry needs.

- Flexible and community-driven – The open-source community constantly improves it, making it a rapidly evolving tool.

If you’re into AI development or need an adaptable model for specialized use cases, LLaMA 3 is worth exploring.

5. DeepSeek R1: The Specialist for Technical Research

DeepSeek R1 has proven particularly useful for research applications that require specialized knowledge.

- Precision in niche domains – It excels at highly technical or academic inquiries.

- Efficient data synthesis – I’ve found it helpful in summarizing complex topics into digestible insights.

- Reliable for expert-level tasks – If you need an AI model that understands advanced concepts, this is a strong choice.

For those working in scientific research, engineering, or specialized fields, DeepSeek R1 delivers targeted, high-quality responses.

In Summary

GPT-4o excels at general AI tasks. I use it almost daily for everything from drafting emails to helping debug code.

Claude 3.5 stands out for ethical AI applications and long-context analysis. I’ve been impressed with its ability to analyze lengthy documents while maintaining coherence.

Gemini 1.5 shines with its web-powered capabilities. The way it can incorporate real-time information makes it particularly useful for research.

LLaMA 3 is perfect for open-source projects. I’ve been tinkering with fine-tuning it for specific domains and the results have been promising.

DeepSeek R1 has become my go-to for research applications that require specialized knowledge.

Real-World Applications of Language Models

The applications of these models have expanded far beyond what I initially imagined possible:

AI Writing & Content Creation tools like Jasper have transformed how content gets produced. I’ve used them to help overcome writer’s block and generate first drafts that I can then refine.

Code Generation & Debugging tools like Blackbox have saved countless hours. The first time it accurately completed a complex function I was writing, I literally said “wow” out loud.

AI-Powered Search & Research platforms like Perplexity have changed how I find information. Traditional search engines now feel clunky and outdated in comparison.

Automated Customer Support systems have improved dramatically. Remember those frustrating chatbots that could never understand what you wanted? That era is thankfully ending.

Challenges & Ethical Considerations

Despite all the progress, we’re still grappling with significant challenges:

Bias & Fairness Issues remain persistent problems. LLMs reflect biases in their training data, and I’ve seen firsthand how this can lead to problematic outputs.

Misinformation & AI Hallucination continue to be thorny issues. I’ve caught models confidently stating completely fabricated information – which is especially dangerous when they sound so authoritative.

Data Privacy & Security concerns keep me up at night sometimes. There’s always the risk of AI models leaking sensitive information from their training data.

High Energy Consumption for training these models is staggering. The environmental impact of developing ever-larger models is something we can’t ignore. I’ve started prioritizing more efficient models in my own work as a result.

The Future of Language Models

Looking ahead, I’m excited about several developing trends:

AI that understands emotions & human intent better is on the horizon. The current generation still sometimes misses emotional subtext that would be obvious to humans.

Smaller, more efficient models for on-device AI will make these tools more accessible and private. I’ve been testing some compact models that run entirely on my phone with impressive results.

Advanced multimodal AI integrating video, text, and speech will create more natural interfaces. I recently saw a demo of a system that could analyze and discuss the content of videos, and it felt like a glimpse into the future.

Greater focus on ethical AI & AI regulation is both necessary and inevitable. The conversations around responsible AI development have matured considerably in the past few years.

Conclusion & Next Steps

Language models have evolved from simple rule-based NLP systems to powerful AI-driven models like GPT-4o, Claude 3.5, and Gemini 1.5. The pace of innovation has been breathtaking, and I expect it to continue accelerating.

These powerful LLMs now power AI applications in business, research, and everyday life, but they come with ethical challenges we’re still learning to navigate. I’ve seen both the incredible potential and concerning pitfalls firsthand.

As AI continues to advance, models will become more efficient, reliable, and human-like in understanding and generating language. The challenge for all of us – developers, users, and society at large – is to harness these tools responsibly while mitigating their risks.

I’m excited to see where this technology goes next, but more importantly, I’m committed to being part of the conversation about how we use it ethically and effectively. The future isn’t just happening to us – we’re building it together, one prompt at a time.

Author

Agastya is the founder of LabelsDigital.com, a platform committed to delivering actionable, data-driven insights on AI, web tools, and passive income strategies. With a strong background in entrepreneurship, web software, and AI-driven technologies, he cuts through the noise to provide clear, strategic frameworks that empower businesses and individuals to thrive in the digital age. Focused on practical execution over theory, Agastya leverages the latest AI advancements and digital models to help professionals stay ahead of industry shifts. His expertise enables readers to navigate the evolving digital landscape with precision, efficiency, and lasting impact. He also offers consultancy services, helping turn innovative ideas into digital reality.
